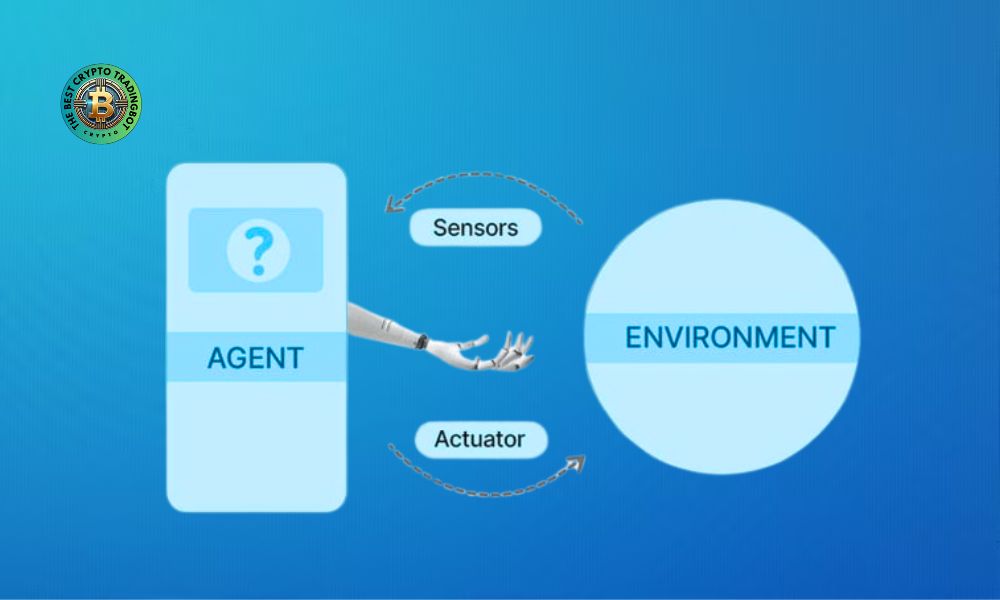

The environment of an AI agent is a foundational concept that shapes how the agent interacts with and learns from its surroundings. It encompasses all the external factors the agent can perceive through its sensors and act upon through its actuators as it performs its assigned tasks and pursues its goals.

What is the environment of an AI agent?

The environment of an AI agent is the entire external world with which the agent interacts. This is the space where the agent gathers information, makes decisions, and executes actions. At any moment the environment is in some “state,” and it receives “actions” from the agent. In return, it provides the agent with “percepts” through the agent’s sensors, and its state can change as a result of the agent’s actions.

For example, for an autonomous vacuum cleaner robot:

- Agent: The vacuum cleaner robot.

- Environment: The floor, furniture, walls, dirt, and obstacle locations.

- Sensors: Camera, collision sensors, infrared dust detection sensors.

- Actuators: Wheels, suction motor, brushes.

The relationship between the agent and its environment is a continuous loop: the agent perceives the environment, processes the information, decides on an action, and performs it; the environment then responds, producing a new state that the agent perceives in turn.
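The perceive-decide-act loop can be sketched in a few lines of Python. This is a minimal illustration, not a definitive implementation: it assumes a hypothetical two-square vacuum world (squares "A" and "B"), a common textbook simplification of the robot example above, and every class and function name here is invented for the sketch.

```python
from dataclasses import dataclass, field


@dataclass
class VacuumWorld:
    """Hypothetical two-square environment: each square is dirty or clean."""
    dirt: dict = field(default_factory=lambda: {"A": True, "B": True})
    location: str = "A"

    def percept(self):
        # Sensors report the robot's location and whether that square is dirty.
        return self.location, self.dirt[self.location]

    def execute(self, action):
        # The environment updates its state in response to the agent's action.
        if action == "Suck":
            self.dirt[self.location] = False
        elif action == "Right":
            self.location = "B"
        elif action == "Left":
            self.location = "A"


def reflex_vacuum_agent(percept):
    # Decide on an action using only the current percept.
    location, dirty = percept
    if dirty:
        return "Suck"
    return "Right" if location == "A" else "Left"


def run(env, agent, steps):
    history = []
    for _ in range(steps):
        percept = env.percept()   # perceive
        action = agent(percept)   # process and decide
        env.execute(action)       # act; the environment moves to a new state
        history.append((percept, action))
    return history


world = VacuumWorld()
for percept, action in run(world, reflex_vacuum_agent, 4):
    print(percept, "->", action)
```

Starting with both squares dirty, the run prints `('A', True) -> Suck`, `('A', False) -> Right`, `('B', True) -> Suck`, and `('B', False) -> Left`, after which both squares are clean. Each iteration of `run` is one pass through the loop described above.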

Key characteristics of an AI agent’s environment

Understanding the characteristics of an agent’s environment is crucial because they directly influence the choice of agent architecture and algorithms. Here are some key characteristics:

Fully observable vs. Partially observable:

- Fully observable: If the agent’s sensors give it complete access to the state of the environment at all times, the environment is fully observable. Example: A chessboard (the agent knows the position of every piece).

- Partially observable: If the agent can access only partial information about the environment’s state, the environment is partially observable. Example: A self-driving car cannot know the intentions of other drivers or detect obstacles hidden from its sensors. Most real-world environments fall into this category.
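The difference shows up in what a percept contains. The sketch below is a minimal illustration that assumes a hypothetical two-square vacuum world (the state layout and all names are invented for this example): a fully observable percept returns the whole state, while a partially observable one returns only the robot's current square, so the agent has to keep its own record of what it has seen.

```python
# Hypothetical state of the two-square vacuum world: the robot is on "A",
# which is clean, while "B" is still dirty.
state = {"location": "A", "dirt": {"A": False, "B": True}}


def full_percept(state):
    # Fully observable: the sensors expose the complete environment state.
    return {"location": state["location"], "dirt": dict(state["dirt"])}


def partial_percept(state):
    # Partially observable: the robot senses only the square it occupies.
    here = state["location"]
    return {"location": here, "dirty_here": state["dirt"][here]}


class BeliefTracker:
    """Internal model for partial observability: remembers the last observed
    status of each square (None means the square has not been observed yet)."""

    def __init__(self, squares):
        self.belief = {square: None for square in squares}

    def update(self, percept):
        self.belief[percept["location"]] = percept["dirty_here"]
        return dict(self.belief)


print(full_percept(state))     # {'location': 'A', 'dirt': {'A': False, 'B': True}}
print(partial_percept(state))  # {'location': 'A', 'dirty_here': False}
tracker = BeliefTracker(["A", "B"])
print(tracker.update(partial_percept(state)))  # {'A': False, 'B': None}
```

Maintaining internal state like `BeliefTracker` is what a model-based agent does to cope with partial observability; a simple reflex agent reacts only to the current percept.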

Deterministic vs. Stochastic:

- Deterministic: If the next state of the environment is completely determined by the current state and the action performed by the agent, the environment is deterministic. Example: A simple puzzle game with no random elements.

- Stochastic: If there is uncertainty or randomness in how the environment changes or in the outcomes of actions, the environment is stochastic. Examples: A robot moving on uneven terrain that might slip, or a game decided by dice rolls.
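The distinction comes down to the transition function, the rule that maps (state, action) to the next state. In this minimal sketch, a robot on a one-dimensional track moves one unit per action; the 20% slip probability is an assumed value chosen only for illustration.

```python
import random


def deterministic_step(position, action):
    # The next state depends only on the current state and the action.
    return position + (1 if action == "forward" else -1)


def stochastic_step(position, action, rng, slip_prob=0.2):
    # Hypothetical slip model: with probability slip_prob the wheels slip
    # on uneven terrain and the robot stays where it is.
    if rng.random() < slip_prob:
        return position
    return deterministic_step(position, action)


rng = random.Random(0)  # fixed seed so the run is reproducible
trials = 10_000
moved = sum(stochastic_step(0, "forward", rng) == 1 for _ in range(trials))

print(deterministic_step(0, "forward"))  # always 1
print(moved / trials)                    # close to 0.8, varies with the seed
```

Because the stochastic outcome is uncertain, an agent in such an environment has to reason about the probability of each result rather than a single predicted next state.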

Episodic vs. Sequential:

- Episodic: The agent’s experience is divided into discrete “episodes.” In each episode, the agent perceives and then performs a single action. Actions in one episode do not affect subsequent episodes. Example: An image classification system, where each image is an episode.

- Sequential: The agent’s current decision can affect all future decisions. Actions have long-term consequences. Example: Driving a car, playing chess. This is a more common type of environment.
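The contrast can be made concrete with two small functions. This is a sketch under stated assumptions: the threshold classifier and the "sprint"/"cruise" fuel task, with its costs and rewards, are hypothetical examples invented for illustration. In the episodic case each input is scored independently; in the sequential case, the action that looks best right now leaves less fuel for later steps.

```python
def episodic_accuracy(classify, inputs, labels):
    # Episodic: each input is a separate episode with one percept and one
    # action; a mistake on one input does not affect how the next is handled.
    correct = sum(classify(x) == y for x, y in zip(inputs, labels))
    return correct / len(labels)


# Hypothetical sequential task: at every step the agent either "sprint"s
# (reward 3, costs 3 fuel) or "cruise"s (reward 2, costs 1 fuel).
# An action that cannot be paid for earns nothing.
COSTS_AND_REWARDS = {"sprint": (3, 3), "cruise": (1, 2)}


def sequential_return(policy, fuel=3, steps=3):
    total = 0
    for _ in range(steps):
        cost, reward = COSTS_AND_REWARDS[policy(fuel)]
        if fuel >= cost:
            fuel -= cost
            total += reward
    return total


def greedy(fuel):
    return "sprint"  # always takes the largest immediate reward


def steady(fuel):
    return "cruise"


print(episodic_accuracy(lambda x: x > 0, [2, -1, 5], [True, True, True]))  # 2 of 3 correct
print(sequential_return(greedy))  # 3: the first sprint uses up all the fuel
print(sequential_return(steady))  # 6: cheaper actions leave fuel for later steps
```

The greedy policy wins the first step but loses overall, which is why sequential environments call for planning or learning methods that weigh long-term consequences.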

Static vs. Dynamic:

- Static: The environment does not change while the agent is “thinking” or making a decision. Example: A crossword puzzle.

- Dynamic: The environment can change independently of the agent, even when the agent is not acting. The agent needs to keep up with these changes. Example: Street traffic, stock market.
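To see why timing matters, the sketch below simulates a dynamic environment with a discrete clock. The `TrafficLight` class, its 10-tick cycle, and `decide_and_act` are invented for illustration: an agent that deliberates too long ends up acting on a stale percept, something that cannot happen in a static environment such as a crossword puzzle.

```python
class TrafficLight:
    """Hypothetical dynamic environment: the light cycles on its own clock,
    changing every 10 ticks whether or not the agent acts."""

    CYCLE = ("green", "yellow", "red")

    def __init__(self):
        self.t = 0

    def tick(self, n=1):
        self.t += n

    def observe(self):
        return self.CYCLE[(self.t // 10) % len(self.CYCLE)]


def decide_and_act(env, think_ticks):
    seen = env.observe()      # percept when deliberation starts
    env.tick(think_ticks)     # the world keeps changing while the agent thinks
    actual = env.observe()    # state when the chosen action takes effect
    return seen, actual


print(decide_and_act(TrafficLight(), think_ticks=5))   # ('green', 'green')
print(decide_and_act(TrafficLight(), think_ticks=15))  # ('green', 'yellow')
```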

Discrete vs. Continuous:

- Discrete: The state of the environment, time, percepts, and actions of the agent have a finite, distinct set of values. Example: Playing chess (the number of squares and pieces is finite).

- Continuous: The state, time, percepts, or actions can take values within a continuous range. Example: Temperature control, driving (velocity, steering angle).
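In code, the difference often appears in how the action space is represented. This minimal sketch uses a finite tuple of grid moves for the discrete case and bounded real-valued ranges for the continuous case; the steering and speed limits are assumed values for illustration, not figures from any real vehicle.

```python
import math

# Discrete: a finite set of distinct actions, e.g. moves in a grid world.
DISCRETE_ACTIONS = ("up", "down", "left", "right")


def is_valid_discrete(action):
    return action in DISCRETE_ACTIONS


# Continuous: hypothetical driving limits. Steering angle in radians and
# speed in m/s can take any real value inside these intervals.
STEER_RANGE = (-math.pi / 6, math.pi / 6)
SPEED_RANGE = (0.0, 30.0)


def clip(value, low, high):
    return max(low, min(high, value))


def continuous_action(steer, speed):
    # Any requested value is projected back into the allowed range.
    return clip(steer, *STEER_RANGE), clip(speed, *SPEED_RANGE)


print(is_valid_discrete("left"), is_valid_discrete("diagonal"))  # True False
print(continuous_action(0.1, 12.5))  # (0.1, 12.5)
print(continuous_action(1.0, 42.0))  # steering capped at pi/6, speed at 30.0
```

A discrete action can be checked by membership in a finite set, while a continuous action is any value within an interval, which is why continuous environments typically call for different algorithms than, say, a chess engine enumerating legal moves.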

Single-agent vs. Multi-agent:

- Single-agent: The agent operates alone in the environment.

- Multi-agent: Multiple agents interact in the same environment. These agents can be cooperative, competitive, or both. Example: Robot soccer, trading bots in a market. Analyzing the environment is more complex in this case because the agent must account for the other agents’ behavior.
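A toy market makes the extra complexity concrete. In this minimal sketch, `market_step` and its one-unit price impact per order are invented for illustration and are not a realistic market model. The point is that the result of bot_a's action depends on what the other bot does, so bot_a cannot predict the next state from its own action alone.

```python
def market_step(price, orders):
    """Hypothetical toy market: each bot submits +1 (buy) or -1 (sell), and
    the price moves by the net order flow of every bot in the environment."""
    return price + sum(orders.values())


# Single-agent: the outcome depends only on bot_a's own action.
print(market_step(100, {"bot_a": +1}))               # 101
# Multi-agent: the same action by bot_a leads to different outcomes
# depending on what the other bot does.
print(market_step(100, {"bot_a": +1, "bot_b": -1}))  # 100
print(market_step(100, {"bot_a": +1, "bot_b": +1}))  # 102
```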

The importance of defining an AI agent’s environment

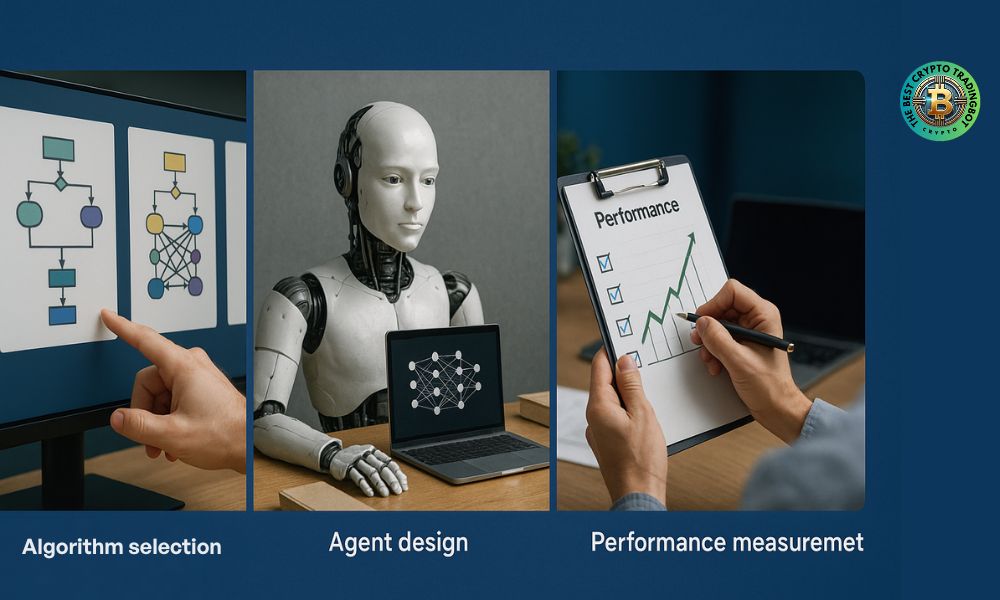

Accurately defining the characteristics of the environment is the first and most crucial step in designing an effective AI agent.

- Algorithm selection: Different algorithms are suited to different types of environments. For example, reinforcement learning algorithms are often used in sequential and stochastic environments.

- Agent design: The agent’s architecture (e.g., simple reflex agent, model-based agent, goal-based agent, utility-based agent) heavily depends on the complexity and characteristics of the environment.

- Performance measurement: The agent’s performance measure must be clearly defined within the context of the specific environment in which it operates.
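For the vacuum robot, one possible performance measure is sketched below. It assumes a hypothetical scoring rule, one point per clean square per time step with an optional energy penalty per move, and shows that the same behavior can score differently depending on how the measure is defined for the environment.

```python
def performance(dirt_history, moves, move_cost=0.0):
    """Hypothetical performance measure for the vacuum robot: +1 for each
    clean square at each time step, minus an optional cost per move."""
    clean_square_steps = sum(
        sum(1 for dirty in dirt.values() if not dirty) for dirt in dirt_history
    )
    return clean_square_steps - move_cost * moves


# Dirt status of squares "A" and "B" over three time steps, with one move.
history = [
    {"A": True, "B": True},
    {"A": False, "B": True},
    {"A": False, "B": False},
]
print(performance(history, moves=1))                 # 3.0
print(performance(history, moves=1, move_cost=0.5))  # 2.5
```

Adding the move penalty changes which behavior counts as "good" (for example, discouraging the robot from shuttling back and forth between already clean squares), so the measure has to be chosen with the specific environment and task in mind.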

A clear understanding of an AI agent’s environment not only helps developers build smarter AI systems but also helps ensure that those systems operate safely and effectively in real-world applications, from simple virtual assistants to complex autonomous systems.

The environment is a key factor for every AI agent, serving as both the stage on which it acts and its interactive partner. Understanding and accurately describing this environment helps optimize an agent’s design and performance. For more exciting insights into AI and technology, follow The Best Crypto TradingBot!